library(tidyverse)

#devtools::install_github("mbrussell/stats4nr")

library(stats4nr)

data(elm)

I recently watched the video “Teaching the tidyverse in 2023” by Mine Çetinkaya-Rundel to learn more about recent changes to my favorite R package. I learned quite a bit of new tricks and techniques for my everyday R coding and sharing a few of them here.

I’ll use the elm data set from the stats4nr package as I work through a few examples in the post. This data set contains observations on 333 cedar elm trees (Ulmus crassifolia Nutt.) measured in Austin, Texas:

Here are a few of the key points I took away from the video.

The native pipe works.

R has a native pipe denoted as |>. The pipe is shorthand for saying “then.” In other words, you can say “use my data frame, then make a new variable in it.” Now, the native pipe is a part of the the tidyverse.

If you’re a longtime user of the tidyverse, you might have been using %>%. Now, the pipe integrates with base R. For example, the code:

elm |>

summary()provides the same output as:

elm %>%

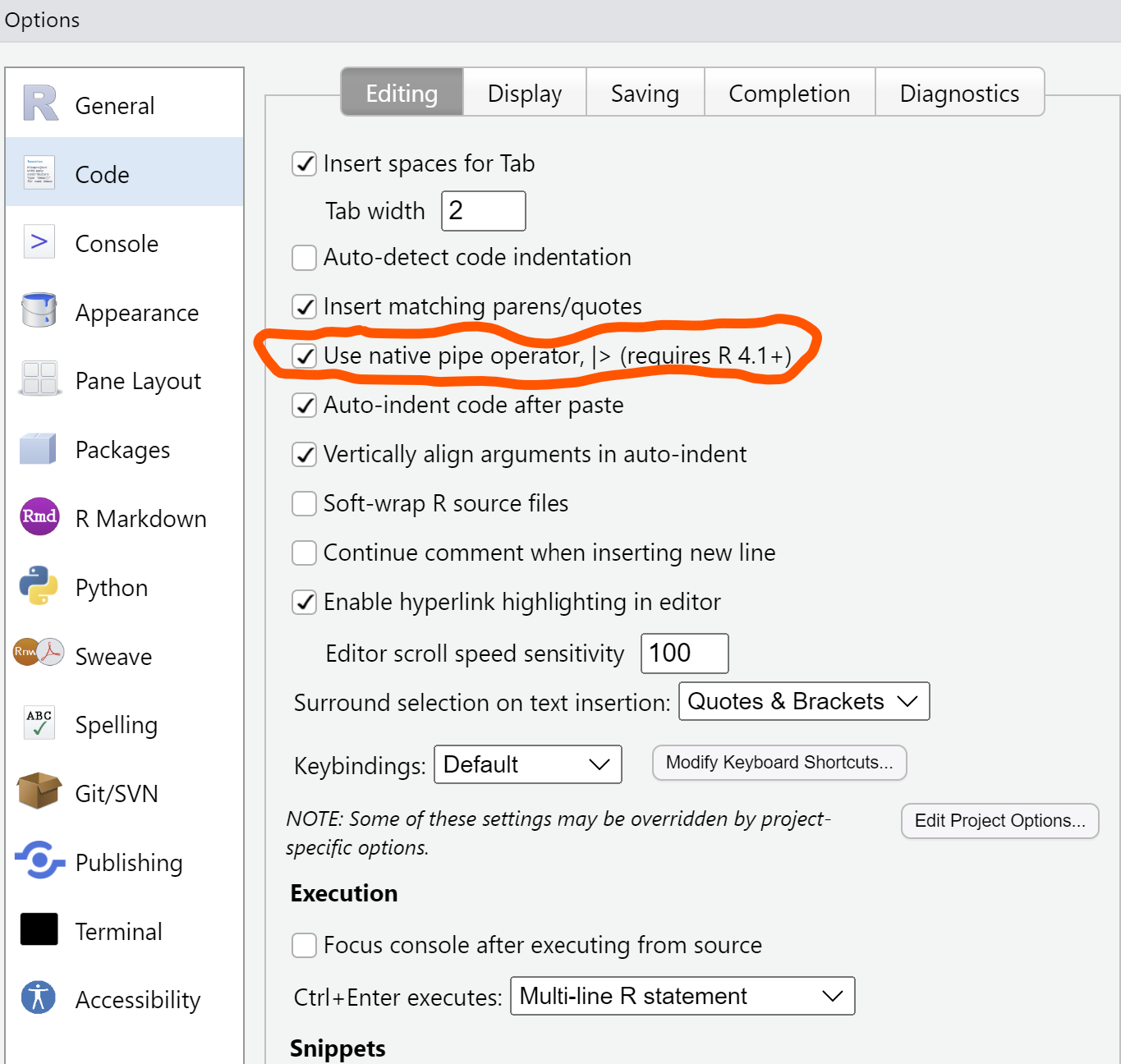

summary()If you’re using RStudio, you can change the setting to use the native pipe by going to Tools -> Global Options, -> Code:

The lubridate package is now a part of the core tidyverse.

If your data contain a lot of dates and times, the lubridate package is your best friend. This package is now a part of the tidyverse and no longer needs to be called separately.

The lubridate package has several functions for working with date and time variables. For example, we can change strings of dates into year-month-date formats with the ymd() or dmy() functions:

my_date <- "20230914"

my_date2 <- "14092023"

ymd(my_date)[1] "2023-09-14"dmy(my_date2)[1] "2023-09-14"Easy ways to use a function in one package that’s named the same in another package

Several packages in R use functions with the same name, which can present problems when you tell R to use a function. You will often see this as a warning when you first load a library into your R session when there are functions of the same name from other packages. As Mine mentions in her post, R will often silently choose the function from a package to use.

For example, the lag() function is available in both dplyr and the base R stats package. If we wanted to explicitly tell R to use this function from the dplyr package, we could write dplyr::lag(). But that could be repetitive if we use that function multiple times in our data analysis.

Now with the conflicts_prefer() function from the conflicted package, you can tell R to use a specific function once, then forget about it for the rest of your analysis. For example,

library(conflicted)

conflicts_prefer(dplyr::lag)[conflicted] Will prefer dplyr::lag over any other package.This will choose the lag() function from dplyr. For example, say we wanted to use it to lag the tree diameter measurement in the elm data set:

elm |>

mutate(DIA2 = lag(DIA)) |>

select(DIA, DIA2)# A tibble: 333 × 2

DIA DIA2

<dbl> <dbl>

1 5 NA

2 5 5

3 5.1 5

4 5.1 5.1

5 5.1 5.1

6 5.1 5.1

7 5.2 5.1

8 5.2 5.2

9 5.2 5.2

10 5.2 5.2

# ℹ 323 more rowsNew updates to joining variables

It seems like joining data sets is one of the most common tasks I do in my work, and tidyverse has new ways of doing this. The join_by() function can take the place of the by = statements. The good thing is there is no need to quote variable names anymore in the by() statement!

For example, say we have a data set that contains the codes for all open grown trees in the elm data set:

crown_class <- tribble(

~crown_code, ~CROWN_CLASS_NAME,

1, "Open grown",

2, "Not open grown",

3, "Not open grown",

4, "Not open grown",

5, "Not open grown"

)Then we could join this to the elm data set. What I love about join_by() is there’s no need to have the variable name the same in both data sets that you want to merge by. Note that the code indicating crown class is named CROWN_CLASS_CD in the elm data set and crown_code in the crown_class data set. It can be joined by expressing the double equal sign ==:

elm |>

left_join(crown_class, join_by(CROWN_CLASS_CD == crown_code)) |>

select(DIA, HT, CROWN_CLASS_CD, CROWN_CLASS_NAME)# A tibble: 333 × 4

DIA HT CROWN_CLASS_CD CROWN_CLASS_NAME

<dbl> <dbl> <dbl> <chr>

1 5 32 4 Not open grown

2 5 25 5 Not open grown

3 5.1 21 5 Not open grown

4 5.1 27 3 Not open grown

5 5.1 22 3 Not open grown

6 5.1 27 5 Not open grown

7 5.2 29 3 Not open grown

8 5.2 20 4 Not open grown

9 5.2 18 3 Not open grown

10 5.2 17 3 Not open grown

# ℹ 323 more rowsThe join functions also have more ways to identify unmatched rows in your data. If this is of interest to you, explore the unmatched = “drop” and unmatched = “error” statements.

New function to group variables

A typical summary operation with dplyr code to obtain a mean and standard deviation of a variable might go something like:

elm |>

group_by(CROWN_CLASS_CD) |>

summarize(mean_DIA = mean(DIA),

sd_DIA = sd(DIA))# A tibble: 5 × 3

CROWN_CLASS_CD mean_DIA sd_DIA

<dbl> <dbl> <dbl>

1 1 7.5 3.29

2 2 17.6 7.56

3 3 10.9 5.10

4 4 7.77 2.72

5 5 7.09 2.04Now, you can add a .by statement to add the grouping variable within the same call:

elm |>

summarize(

mean_DIA = mean(DIA),

sd_DIA = sd(DIA),

.by = CROWN_CLASS_CD

)# A tibble: 5 × 3

CROWN_CLASS_CD mean_DIA sd_DIA

<dbl> <dbl> <dbl>

1 4 7.77 2.72

2 5 7.09 2.04

3 3 10.9 5.10

4 1 7.5 3.29

5 2 17.6 7.56I’m not sure how much this new technique will help in my analysis, but it does make sense to keep the functions within the same call. This may be particularly useful if there are more operations happening after the grouping.

New function to split columns and rows

New functions are available to help separate columns and rows into multiple columns or rows. For example, say we have a variable in the elm data set that separates the diameter and height measurements with a comma:

elm |>

mutate(DIA_HT = paste0(DIA, ",", HT)) |>

select(DIA, HT, DIA_HT)# A tibble: 333 × 3

DIA HT DIA_HT

<dbl> <dbl> <chr>

1 5 32 5,32

2 5 25 5,25

3 5.1 21 5.1,21

4 5.1 27 5.1,27

5 5.1 22 5.1,22

6 5.1 27 5.1,27

7 5.2 29 5.2,29

8 5.2 20 5.2,20

9 5.2 18 5.2,18

10 5.2 17 5.2,17

# ℹ 323 more rowsThe separate_wider_delim() function separates columns into multiple one based on a delimiter. You can then rename the variables directly in the names = statement:

elm |>

mutate(DIA_HT = paste0(DIA, ",", HT)) |>

separate_wider_delim(DIA_HT,

delim = ",",

names = c("DIA2", "HT2")) |>

select(DIA2, HT2)# A tibble: 333 × 2

DIA2 HT2

<chr> <chr>

1 5 32

2 5 25

3 5.1 21

4 5.1 27

5 5.1 22

6 5.1 27

7 5.2 29

8 5.2 20

9 5.2 18

10 5.2 17

# ℹ 323 more rowsThis can be a handy function, particularly for those that work with character strings that need to split them up into component parts. Note that the original variable that you separate (i.e., the DIA_HT variable) is dropped after you use the function.

Conclusion

Check out these new features in the tidyverse and try them in your own analysis. These new features are particularly well adapted for new learners of R/the tidyverse given they make more intuitive sense (and speed up performance). Let me know if any of the new techniques presented here help in your own data analysis.

–

By Matt Russell. Email Matt with any questions or comments.